| Kushagra Srivastava*1 | Rutwik Kulkarni*1 | Manoj Velmurugan*1 | Nitin J. Sanket1 |

* Equal Contribution

1 Perception and Autonomous Robotics Group (PeAR)

Autonomous aerial robots are becoming commonplace in our lives. Hands-on aerial robotics courses are pivotal in training the next-generation workforce to meet growing market demands. An efficient and compelling course of this kind depends on a reliable testbed. In this paper, we present VizFlyt, an open-source, perception-centric Hardware-In-The-Loop (HITL) photorealistic testing framework for aerial robotics courses. We use the pose from an external localization system to hallucinate photorealistic visual sensors in real time using 3D Gaussian Splatting. This enables stress-free testing of autonomy algorithms on aerial robots without the risk of crashing into obstacles. The system achieves an update rate of over 100 Hz. Lastly, we build upon our past experience of offering hands-on aerial robotics courses and propose a new open-source and open-hardware curriculum based on VizFlyt. We test our framework on various course projects in real-world HITL experiments and present results that show the efficacy of such a system and its large range of potential use cases.

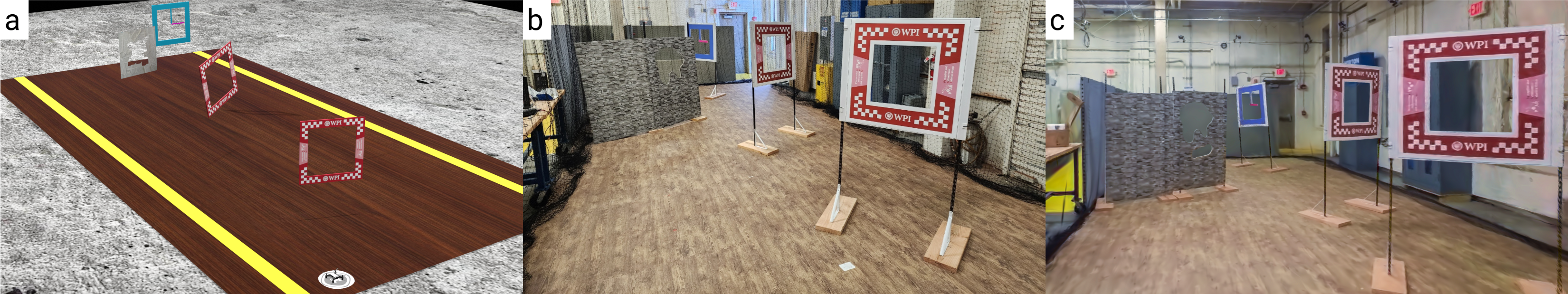

Figure: Evolution of perception-centric offerings of the course. (a) course based on simulation, (b) course based on a real-world obstacle course, and (c) new course based on the proposed VizFlyt framework, where the image is a real-time photorealistic render of a hallucinated camera on an aerial robot used for autonomy tasks. All the images in this paper are best viewed in color on a computer screen at 200% zoom.

| Paper | GitHub | Cite |

Perception and Autonomous Robotics Group

Worcester Polytechnic Institute

Copyright © 2024
