We work across many areas united by a common theme: pushing the boundaries of what is possible on tiny aerial robots using only on-board sensing and computation. Our vision is to use these tiny robots for the greater good.

We work on camera-based navigation algorithms for aerial robots. The goal is to develop a fully functional system that can operate in the wild without the need for any external infrastructure such as GPS or motion capture.

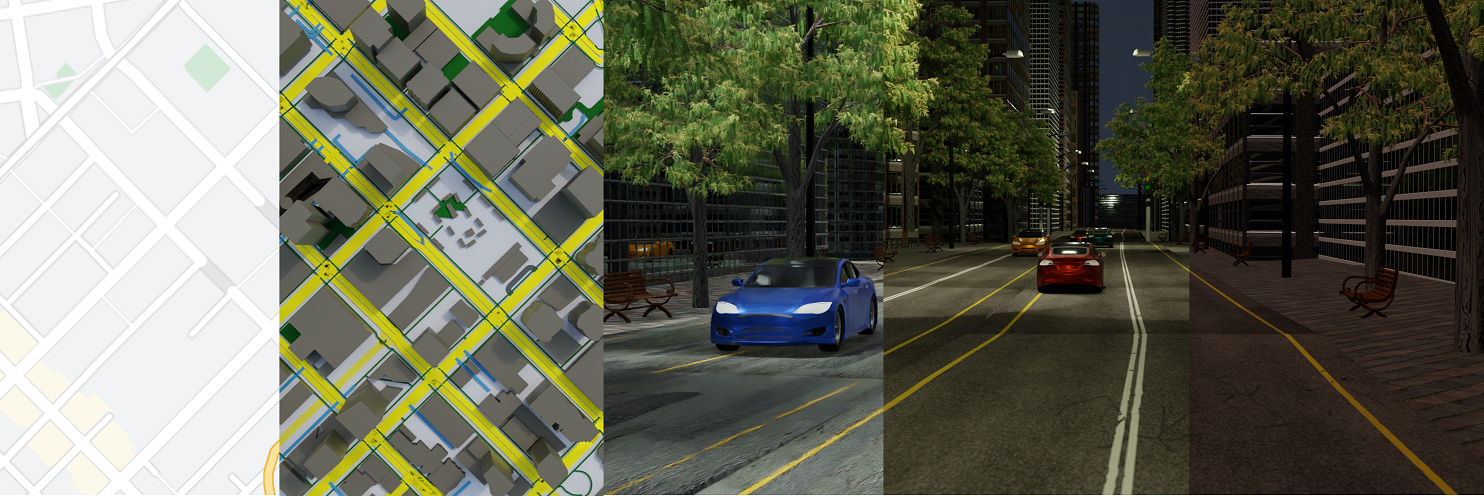

We work extensively on the perception stack for autonomous robots. Most perception modules are built on deep learning and require large amounts of high-quality data, which are often impractical to collect. To this end, we propose methods that leverage simulation engines to automatically generate high-fidelity data.

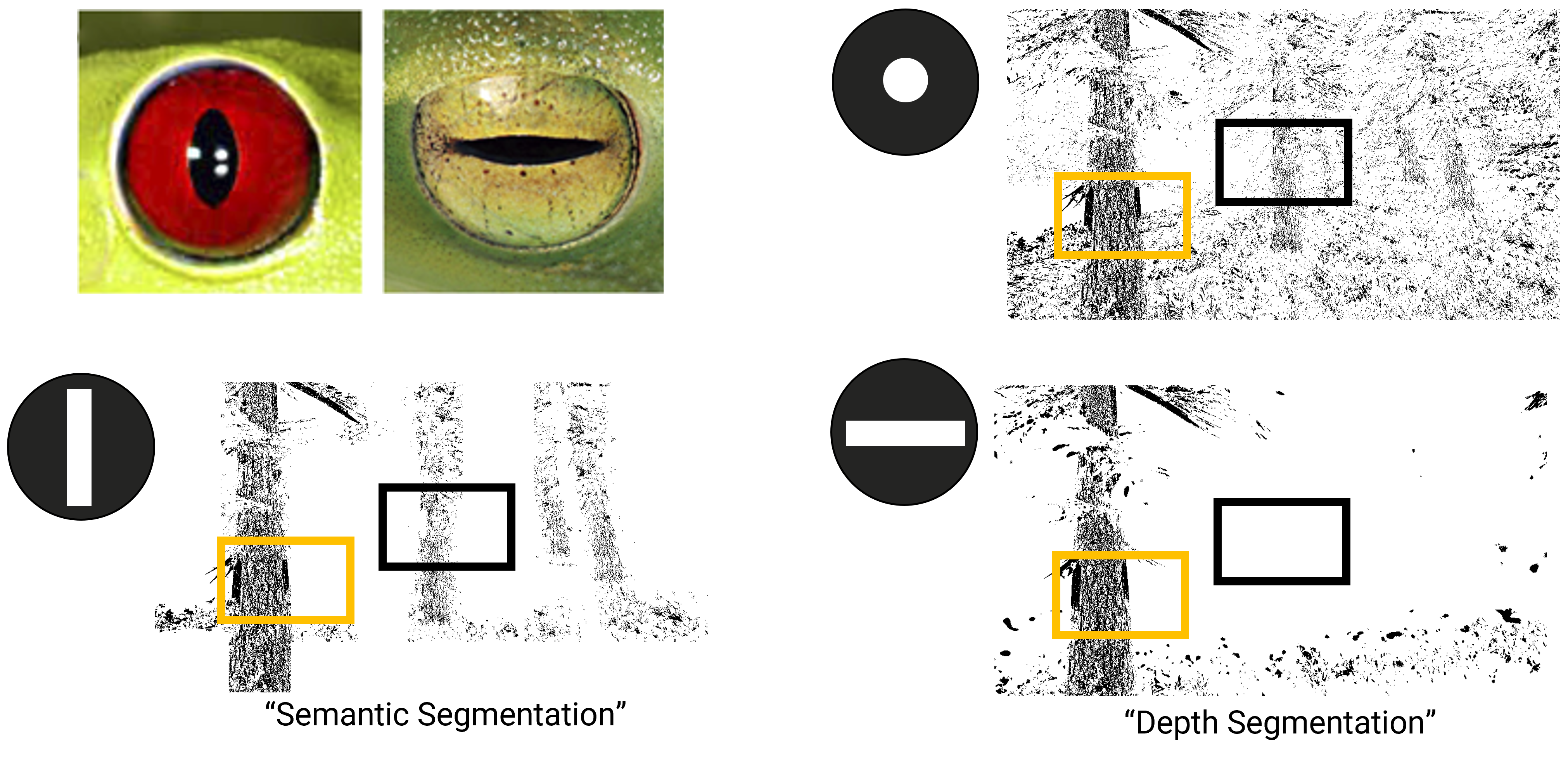

Deep learning has become the gold standard for perception on robots. With the advent of accelerated computing in small form factors, it has also become the de facto standard for our drones. We work on tackling the Achilles' heel of deep learning systems, namely explainability, uncertainty quantification, and generalization.

When building tiny autonomous robots, one needs to reimagine the sensor suite and go back to the drawing board to devise novel ways of acquiring and processing sensor information. We work on physical modelling, using mathematical tools from computational imaging, to push the boundaries of what is possible with a minimal amount of sensing and perception.

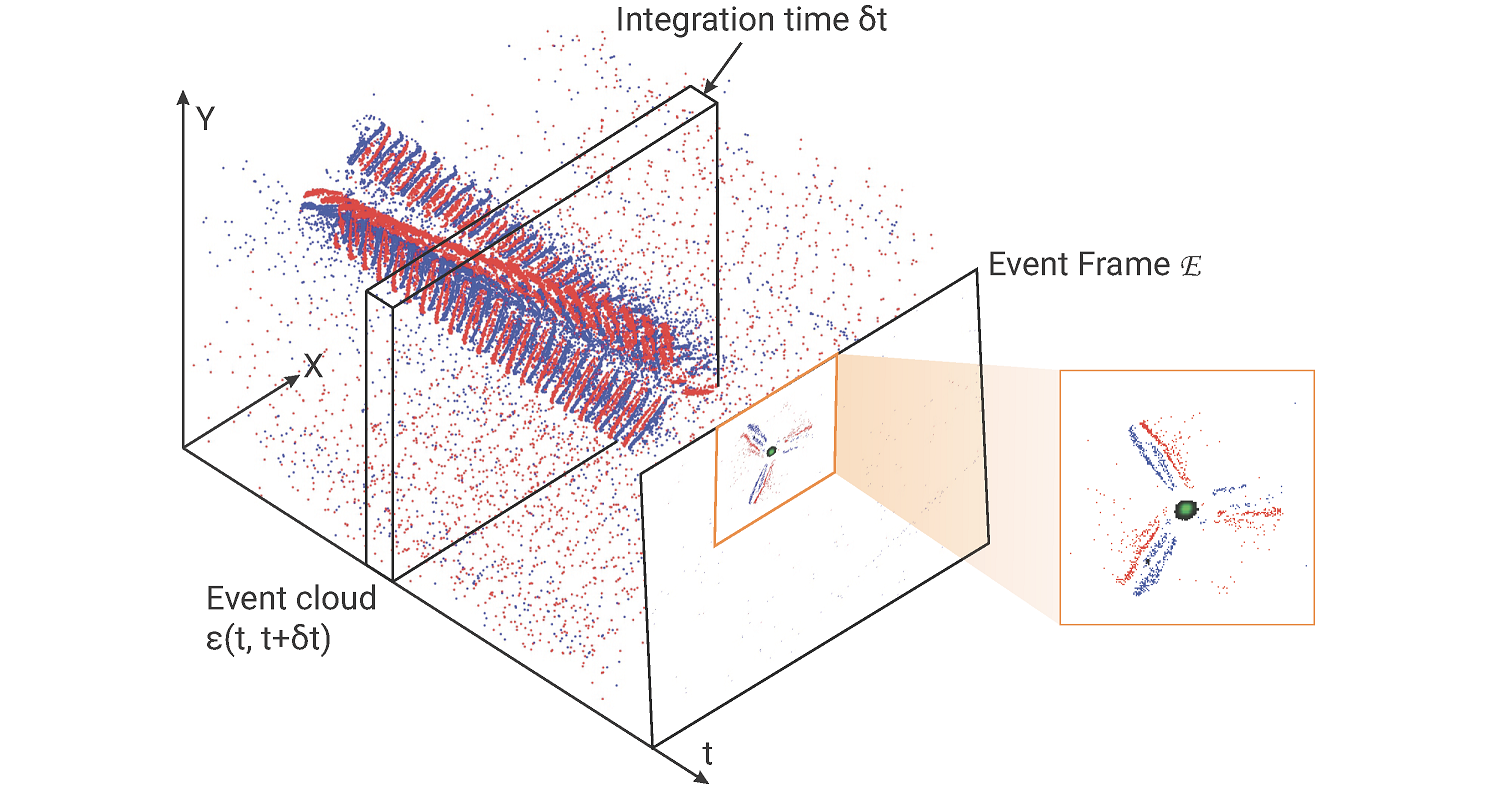

Neuromorphic event-based sensors are bio-inspired sensors that work like our eyes. Instead of capturing entire frames, they transmit only pixel-level changes in light intensity, called events, caused by motion of the scene or the camera. These events offer high dynamic range, low latency (on the order of microseconds), and no motion blur. We couple these sensors with neuromorphic neural networks, called Spiking Neural Networks, for perception and autonomy.
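The event-generation principle described above can be sketched with the idealized contrast-threshold model commonly used in event-camera simulators: a pixel fires an event whenever its log intensity has changed by a fixed threshold since its last event, and the sign of the change gives the event's polarity. This is a minimal sketch, not the group's implementation. The function name `generate_events`, the threshold of 0.2, the small `eps` offset, and the representation of frames as flattened lists of pixel intensities sampled at discrete time steps are all illustrative assumptions; real sensors also exhibit noise, refractory periods, and per-pixel threshold variation.

```python
import math
from typing import List, Tuple

# (time step, pixel index, polarity): polarity is +1 for brighter, -1 for darker.
Event = Tuple[int, int, int]


def generate_events(frames: List[List[float]],
                    threshold: float = 0.2,
                    eps: float = 1e-3) -> List[Event]:
    """Hypothetical idealized event-camera model (illustrative only).

    Each pixel keeps a reference log intensity and emits one event per
    full `threshold` crossing of its log intensity relative to that reference.
    """
    # Reference log intensity per pixel, initialized from the first frame.
    # eps avoids log(0) for completely dark pixels.
    ref = [math.log(v + eps) for v in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for i, v in enumerate(frame):
            diff = math.log(v + eps) - ref[i]
            # A large change can cross the threshold several times,
            # producing several events for the same pixel and time step.
            while abs(diff) >= threshold:
                polarity = 1 if diff > 0 else -1
                events.append((t, i, polarity))
                ref[i] += polarity * threshold
                diff = math.log(v + eps) - ref[i]
    return events


if __name__ == "__main__":
    # Pixel 0 stays constant; pixel 1 doubles in brightness.
    print(generate_events([[1.0, 1.0], [1.0, 2.0]]))
```

A static pixel produces no events at all, while doubling a pixel's brightness (a log change of roughly 0.69) yields three positive events at a threshold of 0.2. Working in log intensity is what gives the model its response to relative rather than absolute brightness changes, which relates to the high dynamic range mentioned above.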

Bee populations have been steadily declining, and we have lost about 40% of bees in the last decade. To aid conservation efforts, we are working on supplementing bees with artificial bees for pollination and crop monitoring.

Obtaining 6-DoF poses and maps is pivotal for accurate navigation and surveying. We leverage the latest neural rendering methods and deep-learning-based sensor fusion approaches to enhance the efficacy of such systems.

Perception and Autonomous Robotics Group

Worcester Polytechnic Institute

Copyright © 2023