We are hiring multiple talented Master's students for directed research/thesis and undergraduates for MQPs in aerial robotics and computer vision/robot perception.

Come push the boundaries of what is possible on tiny robots with us!

We are currently hiring for multiple Master's and undergraduate student positions in the following project domains:

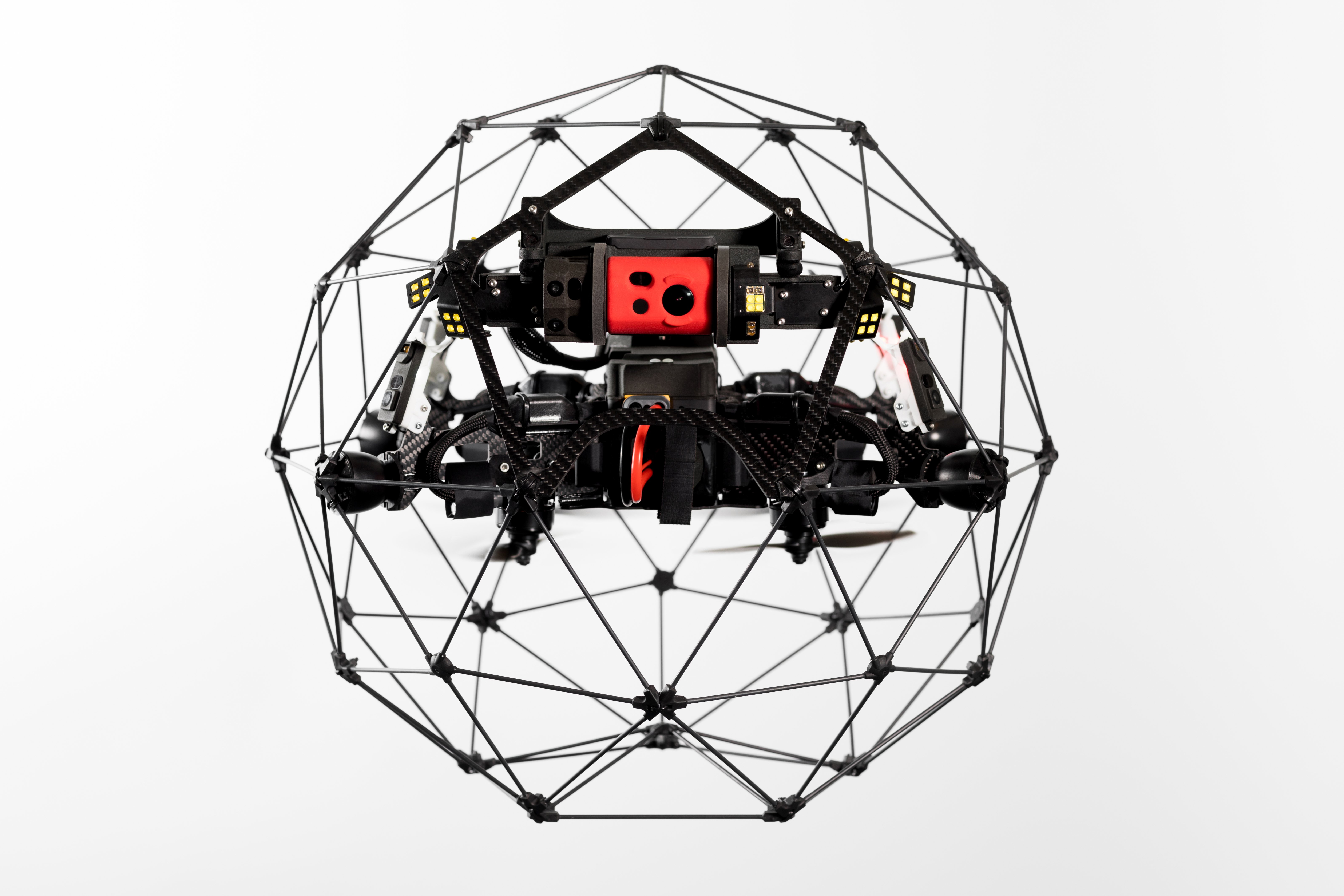

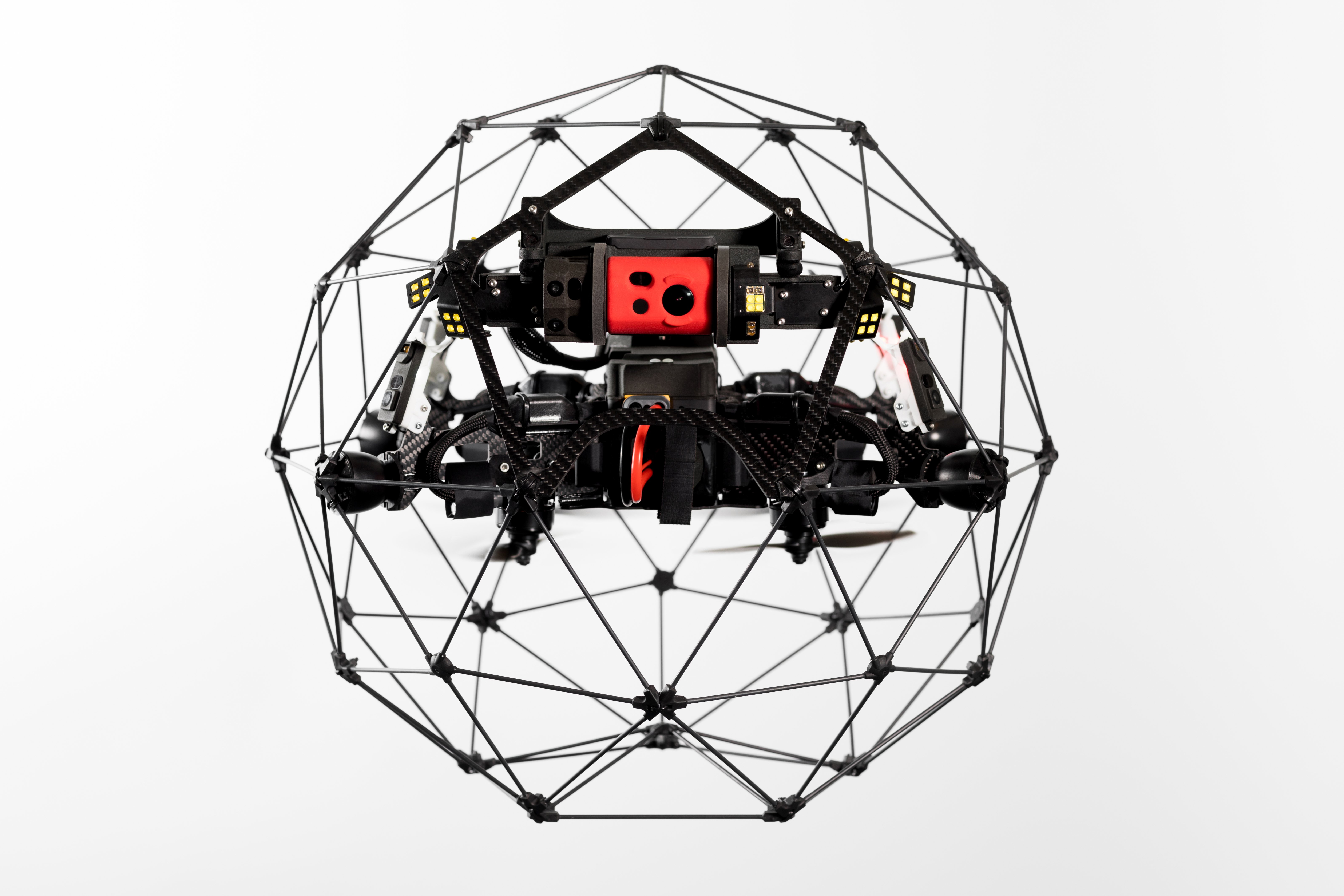

High-speed collision-tolerant drone

Design and test a safety cage for a drone flying at high speed (10 to 30 m/s) that uses perception to traverse cluttered environments, leveraging collisions rather than strictly avoiding them. The cage is designed for a 150 mm carbon-fiber (CF) frame drone carrying a depth camera and a Raspberry Pi 4B+ for onboard processing.

Keywords: CAD, ROS, Python, C++, Depth Camera, Obstacle avoidance, Perception, SLAM, Odometry

High-speed depth-based autonomous flight through cluttered environments

Design and implement an autonomous drone system that maintains an average speed of 5 m/s while navigating an unknown indoor environment and avoiding obstacles with a depth camera, on a quadrotor with a 210 mm diagonal wheelbase. The project involves designing and integrating a 210 mm carbon-fiber (CF) frame drone with a depth camera and a Jetson Orin for onboard processing. The obstacle avoidance algorithm should run at a rate of at least 50 Hz to provide real-time responsiveness.

Keywords: ROS, Python, C++, Depth Camera, Obstacle avoidance, Perception, SLAM, Odometry

Autonomous Flying Ball Catcher

Develop an autonomous drone equipped with a Jetson Orin Nano and an Intel RealSense depth camera to catch a ball. In the first phase, integrate the Vicon motion capture system for accurate positioning during flight tests. In the second phase, implement ball detection, tracking, and prediction logic on the Jetson, overcoming the challenges of intermittent visibility and noisy detections. The goal is a robust system that can navigate to a reachable spot and catch the ball precisely in real-world scenarios.

Keywords: Object Detection, Tracking, Computer Vision, Motion Capture, Onboard Computing, Aerial Robotics

Flight Goggles for Sim2Real2Sim

Create a system that simulates a drone's camera view of complex scenes while the drone flies within the Vicon motion capture setup. Implement pose-dependent RGB camera rendering (using Gaussian Splatting, NeRFs, or other real-time rendering) to accurately reproduce the drone's perspective. Transmit the rendered images to the onboard Jetson computer for real-time control, enabling dynamic and responsive navigation through simulated complex environments.

Morphable aerial robot navigation through tight spaces

Design and build a quadrotor with servo-actuated foldable arms that accommodates a Jetson onboard computer and an Intel RealSense camera. Tune the quadrotor for stable flight in both folded and expanded configurations, implementing real-time gain adjustments for midair folding. The quadrotor must hold its flight path and hover height while the arms fold. Demonstrate the system's ability to navigate narrow gaps using depth camera feedback.

How do I apply?

For Master's thesis, directed research, or independent study applications, follow the steps described here.

These positions are ONLY for current WPI students.

For undergraduate research or MQP applications, follow the steps described here.

These positions are ONLY for current WPI students.

IMPORTANT: Recommendation letters are not required at the first stage, but if you already have them, feel free to upload them with the application form. If you are selected for an online or in-person interview and receive a positive response, recommendation letters will be requested through the application portal (for Ph.D. applications) or by email (for Master's and undergraduate applications).